
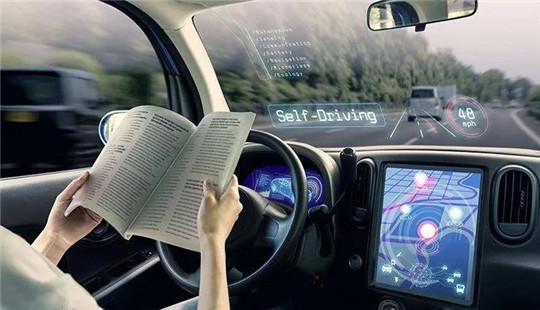

At a time when L2 assisted driving is not yet fully mature, we should always remember that "people" are the ultimate decision-makers.

June 1, Beijing time, should have been a quiet Children's Day, but our attention was drawn to a video of a traffic accident from across the strait. The video spread to every social media platform and sparked heated debate, probably because the car involved, the Tesla Model 3, is already at the center of public attention, and because the accident occurred while the Autopilot assisted driving system was on.

According to the video footage, the accident happened at 6:36 a.m. on National Highway 1 in Taiwan, China. A white truck had overturned in the inner lane and lay almost across the middle of the road. A white Tesla Model 3 traveling at high speed then drove straight into the overturned truck, embedding its front end almost up to the A-pillar.

Fortunately, the truck was reportedly carrying a soft, creamy cargo, which cushioned the impact on the Tesla Model 3. Although the front of the car was damaged, the driver was unhurt. Footage from the overhead highway camera also shows the Model 3 braking hard just before reaching the truck, yet it still hit the overturned vehicle at high speed and even pushed it back a few meters. Before the crash, the truck's driver had been standing on the road ahead of his vehicle, waving to alert passing traffic, but this did not seem to serve as a warning.

Local police later released details of the accident. According to the Tesla driver, Mr. Huang, his Model 3 was in Autopilot assisted driving mode and traveling at about 110 kilometers per hour. He said he braked hard as soon as he saw the truck, but there was neither enough time nor enough distance left to stop, and the car crashed into the roof of the overturned truck. Police also tested Huang for alcohol after the accident and found no evidence of drunk driving.
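To put the driver's account in perspective, the following is a minimal sketch of the textbook stopping-distance estimate (reaction distance plus constant-deceleration braking distance). The 1.0 s reaction time and 8 m/s² deceleration are illustrative assumptions, not figures from the police report or from Tesla, and the function names are hypothetical.

```python
import math


def stopping_distance_m(speed_kmh, reaction_time_s=1.0, decel_ms2=8.0):
    """Distance travelled during the reaction delay plus distance under constant braking.

    reaction_time_s and decel_ms2 are assumed values for illustration only.
    """
    v = speed_kmh / 3.6                      # km/h -> m/s
    reaction = v * reaction_time_s           # car keeps its speed until braking starts
    braking = v ** 2 / (2 * decel_ms2)       # from v^2 = 2 * a * d
    return reaction + braking


def impact_speed_kmh(speed_kmh, gap_m, reaction_time_s=1.0, decel_ms2=8.0):
    """Speed remaining when the car reaches an obstacle gap_m ahead."""
    v = speed_kmh / 3.6
    braking_room = gap_m - v * reaction_time_s
    if braking_room <= 0:
        return speed_kmh                     # obstacle reached before braking begins
    v_sq = v ** 2 - 2 * decel_ms2 * braking_room
    return 0.0 if v_sq <= 0 else math.sqrt(v_sq) * 3.6


if __name__ == "__main__":
    print(f"Stopping distance from 110 km/h: {stopping_distance_m(110):.1f} m")
    for gap in (20, 60, 100):
        print(f"Obstacle noticed {gap} m ahead -> impact at {impact_speed_kmh(110, gap):.0f} km/h")
```

Under these assumptions a car at 110 km/h needs roughly 89 m to stop, so an obstacle noticed only a few dozen meters ahead is still struck at a substantial speed even with full braking.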

At this point, the main cause of this Tesla's "near-death experience" appears to be a serious flaw in its Autopilot assisted driving system. The accident in Taiwan also recalls two similar accidents in the United States a few years ago. The difference is that both of those ended with the driver killed.

In May 2016, Joshua Brown, a 40-year-old man, was driving a Tesla Model S in Florida with Autopilot engaged when it hit a white semi-truck crossing the road. The driver was killed when the upper part of the car was "cut off." It was also the first fatal accident known to involve Tesla's Autopilot.

Three years later, in March 2019, again in Florida, a Tesla Model 3 traveling at 110 km/h crashed into a white tractor-trailer that was slowly crossing the road. The Model 3 was also in Autopilot mode, and neither the driver nor Autopilot took any evasive action. The upper part of the car was cut off, killing the 50-year-old driver.

The fatal accidents raised serious doubts among users and the industry about the reliability of Tesla's Autopilot system. The severe fallout from the 2016 crash may also have been one reason for Tesla's subsequent split with Mobileye. Under intense public pressure, Tesla has since revised its description of Autopilot to place less emphasis on "autonomous driving."

In fact, a close look at these three accidents, which occurred within a few years of one another, shows that they share the following characteristics: the vehicle that was struck was stationary or moving slowly; its body had large white surfaces; and the Tesla had the Autopilot assisted driving system engaged at the time of the crash.

This strongly suggests that Tesla's assisted driving system has an inherent weakness in this particular situation, and the reasons fall into two categories. First, on the hardware side, Tesla's current Autopilot and the higher-level FSD system are known to rely on the same vision-led sensor suite: eight cameras, 12 ultrasonic sensors, and one enhanced millimeter-wave radar.

At the perception level, this combination is inherently limited. The root of the problem is that cameras, however many are fitted, are good at recognizing moving objects but much weaker with static ones, especially static objects of irregular shape. Millimeter-wave radar is also of limited help with static objects: it is designed to measure speed and distance, and its ability to recognize static obstacles with complex shapes is poor.

As a result, when the front camera of the Model 3 in the Taiwan accident faced the large white surface of the truck, with the early morning sun reflecting strongly off it, it was almost impossible to extract any usable features. After the camera "failed," the vehicle's millimeter-wave radar was likewise unable to identify the obstacle, so Autopilot assumed by default that the road ahead was clear and kept driving at the set speed, which ultimately led to the crash.
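The failure chain described above can be illustrated with a simplified, hypothetical sketch. It assumes a fusion rule in which radar returns that are stationary relative to the ground are only treated as obstacles when the camera confirms them, since radar alone cannot tell an overturned truck from a bridge or a sign gantry. This is an assumption made for illustration, not Tesla's actual logic, and every name below (`RadarReturn`, `brake_candidates`, the tolerances) is invented for the example.

```python
from dataclasses import dataclass


@dataclass
class RadarReturn:
    range_m: float        # distance to the reflecting object
    range_rate_ms: float  # rate of change of that distance; negative when closing


def ground_speed_ms(ret, ego_speed_ms):
    """Object speed over the ground for a target straight ahead.

    range_rate = object_speed - ego_speed, so object_speed = range_rate + ego_speed.
    """
    return ret.range_rate_ms + ego_speed_ms


def is_stationary(ret, ego_speed_ms, tol_ms=0.5):
    return abs(ground_speed_ms(ret, ego_speed_ms)) < tol_ms


def brake_candidates(returns, ego_speed_ms, camera_confirmed_ranges=(), match_tol_m=2.0):
    """Hypothetical rule: keep moving targets; keep stationary ones only with camera support."""
    kept = []
    for ret in returns:
        if not is_stationary(ret, ego_speed_ms):
            kept.append(ret)
        elif any(abs(ret.range_m - c) <= match_tol_m for c in camera_confirmed_ranges):
            kept.append(ret)
    return kept


if __name__ == "__main__":
    ego = 110 / 3.6  # about 30.6 m/s
    returns = [
        RadarReturn(range_m=80.0, range_rate_ms=-30.5),  # stationary object ahead
        RadarReturn(range_m=50.0, range_rate_ms=-5.0),   # slower car ahead, still moving
    ]
    # Camera sees nothing it can classify: the stationary return is dropped.
    print(len(brake_candidates(returns, ego)))
    # Camera reports an obstacle near 79 m: the stationary return is kept.
    print(len(brake_candidates(returns, ego, camera_confirmed_ranges=[79.0])))
```

In this sketch, once the camera fails to classify the white surface, nothing remains to tell the system that the stationary radar return is a real obstacle, which matches the sequence the article describes.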

In fact, before the accident, Li Xiang, CEO of Li Auto, had shared his view on a social platform: "The combination of camera and millimeter-wave radar is like the eyes of a frog. It is good at judging moving objects, but almost incapable of judging non-standard static objects. Progress in vision at this level has almost stalled, and even for moving objects, the recognition rate for anything other than vehicles is below 80 percent, so never treat it as real autonomous driving."

Indeed, as Li Xiang said, whether it is Tesla or the many Chinese EV startups that promote L2 driver assistance as a selling point, their systems may be unable to cope with such situations because of inherent hardware limitations. So how can the problem be solved?

After consulting engineers in the autonomous driving field, the reporter learned that, on the hardware side, lidar and high-precision maps are considered the best way to minimize such unusual accidents. However, Tesla's approach to self-driving technology has always been premised on extreme cost control, so it is almost impossible for it to fit expensive lidar at this stage. Sources also say that Tesla still pays too little attention to high-precision maps internally.

The second factor is Tesla's algorithms. Although Tesla's hardware does have an inherent weakness, the potential of the current sensor suite can be developed further by training the algorithms on enough samples and by continually increasing the computing power of the self-driving chip. For example, after one OTA update, Tesla's assisted driving system can already recognize stationary traffic cones, even if there are occasional "hiccups" in the process.

Looking back at the accident, Tesla had apparently not trained on data for this particular scenario, a white truck lying across the road. When the reporter asked an engineer working in visual processing for an opinion, the engineer said: "Such extreme cases really are a blind spot in the data collection process. Very little data of this type is ever encountered, so there is no way to trigger the emergency mechanism."

So can Tesla solve such problems through redundant algorithms in the future? The answer may be yes. However, the premise is that it is willing to spend enough time, equipment and money to build test sites, invest more in research and development, collect data specific to similar accident scenarios, and finally give its vehicles the corresponding capability through an OTA upgrade.

Finally, beyond "reprimanding" Tesla, everyone should be aware of one point. Under current regulations, if a vehicle has an accident while an L2 assisted driving system is engaged, ultimate responsibility remains with the driver, and the automaker that supplied the driver assistance system is exempt from liability.

Watching the video, it is not hard to see that the other cause of the accident was the driver's inattention. Had he stayed focused on driving, spotted the obstacle ahead in time, taken over the vehicle and left enough braking distance, the crash could have been avoided entirely. After all, at a time when L2 assisted driving is not yet fully mature, we should always remember that "people" are the ultimate decision-makers.
